Creating gRPC+HTTP Servers Based on SQL

Why choose gRPC+HTTP hybrid services?

Stronger compatibility

- Browser support: HTTP is the foundation of web applications, allowing direct browser access, while gRPC requires additional client library support.

- Existing ecosystem: Many existing tools, libraries, and frameworks are based on the HTTP protocol. Combining HTTP with gRPC allows for better integration with existing systems.

Higher flexibility

- Multiple transport protocols: You can choose the appropriate transport protocol based on specific needs. gRPC can be used for scenarios requiring high performance, while HTTP can be used for scenarios requiring high compatibility.

- Multiple data formats: Supports multiple data formats such as JSON, XML, and Protocol Buffers to meet the needs of different clients.

Easier to extend

- Gradual migration: You can gradually migrate to gRPC while maintaining existing HTTP services, avoiding the risks associated with large-scale system replacement.

- Hybrid architecture: Simultaneously supports gRPC and HTTP clients to serve different use cases.

Simpler architecture

- Compared to the gRPC-Gateway + gRPC architecture, the HTTP + gRPC hybrid architecture only requires one service to support both protocols simultaneously, reducing development and maintenance complexity.

By integrating HTTP and gRPC, you can build more flexible and compatible microservice systems to adapt to changing business needs and technical scenarios.

Creating gRPC+HTTP servers based on SQL provides a complete gRPC+HTTP hybrid backend service solution, from development to deployment. Its core feature is that developers only need to connect to a database to generate standardized CRUD APIs automatically, without writing any Go code, which makes this a "low-code" way of developing gRPC services.

Tips

Another gRPC service development solution, Create gRPC+HTTP servers based on protobuf (see Based on Protobuf), allows choosing to use built-in ORM components or custom ORM components, while this solution only uses built-in ORM components. This is the biggest difference between the two solutions.

Applicable scenarios: Suitable for developing gRPC+HTTP hybrid backend service projects primarily featuring standardized CRUD APIs.

Prerequisites

Sponge supports database types mysql, mongodb, postgresql, sqlite. The following operations use mysql as an example to introduce the steps for developing gRPC+HTTP hybrid services. The development steps for other database types are the same.

Environment requirements:

- sponge is installed

- mysql database service

- Database table structure

Tips

Code generation requires a mysql service and database tables. You can start a mysql service using the docker script, and then import the example SQL.

To quickly get familiar with the basic syntax rules of Protobuf, please refer to the Protobuf documentation.

Create gRPC+HTTP Server

Operation Steps

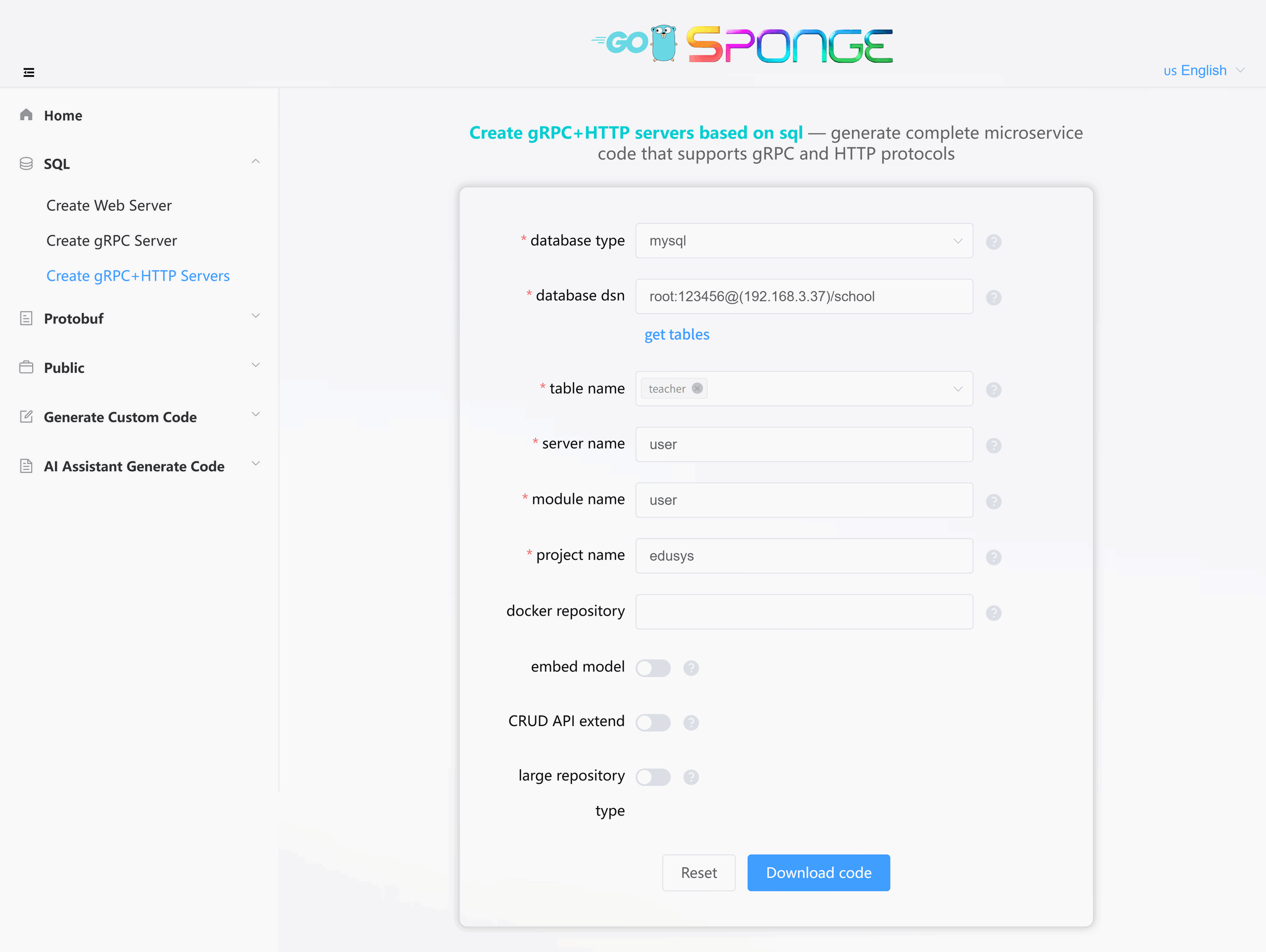

Execute the command sponge run in the terminal to enter the code generation UI:

- Click the left menu bar [SQL] → [Create gRPC+HTTP Server];

- Select the database mysql, fill in the database dsn, then click the button Get Table Names, and select the mysql tables (multiple selection is allowed);

- Then fill in other parameters. Hover your mouse over the question mark ? to view parameter descriptions.

Tips

If you enable the Monorepo Type option when filling in parameters, you must keep this setting for all subsequent relevant code generation.

After filling in the parameters, click the button Download Code to generate the gRPC+HTTP service project code, as shown in the figure below:

Equivalent Command

sponge micro grpc-http --module-name=user --server-name=user --project-name=edusys --db-driver=mysql --db-dsn="root:123456@(192.168.3.37:3306)/school" --db-table=teacher

Tips

The generated gRPC+HTTP hybrid service code directory is named by default in the format service-name-type-time. You can modify the directory name according to your actual needs.

The system automatically saves the records of successfully generated code. When you generate code again, if the Database DSN remains unchanged, the available table list is loaded automatically after the page refreshes or is reopened, without needing to click the Get Table Names button manually.
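The --db-dsn value in the equivalent command appears to follow the shape user:password@(host:port)/dbname. As a rough illustration of how the pieces line up, the sketch below splits such a string with plain string operations. The parseDSN helper and the dsnParts type are hypothetical names for this example only; this is not the parser used by sponge or by the MySQL driver.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// dsnParts holds the pieces of a DSN shaped like user:password@(host:port)/dbname.
type dsnParts struct {
	User, Password, Addr, DBName string
}

// parseDSN is a simplified, hypothetical helper for illustration only.
func parseDSN(dsn string) (dsnParts, error) {
	var p dsnParts
	at := strings.LastIndex(dsn, "@")
	if at < 0 {
		return p, errors.New("dsn: missing '@' between credentials and address")
	}
	p.User, p.Password, _ = strings.Cut(dsn[:at], ":")
	rest := dsn[at+1:]
	open := strings.Index(rest, "(")
	end := strings.Index(rest, ")/")
	if open < 0 || end < open {
		return p, errors.New("dsn: expected (host:port)/dbname after '@'")
	}
	p.Addr = rest[open+1 : end]
	// Anything after '?' would be connection parameters; they are dropped here.
	p.DBName, _, _ = strings.Cut(rest[end+2:], "?")
	if p.DBName == "" {
		return p, errors.New("dsn: missing database name")
	}
	return p, nil
}

func main() {
	p, err := parseDSN("root:123456@(192.168.3.37:3306)/school")
	if err != nil {
		fmt.Println("invalid DSN:", err)
		return
	}
	fmt.Printf("user=%s addr=%s db=%s\n", p.User, p.Addr, p.DBName)
}
```

Checking the DSN this way before running the generator can catch a missing database name or misplaced parentheses early.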

Directory Structure

.

├─ api # API protocol definition directory (proto/OpenAPI, etc.)

│ └─ user # Service name (user service)

│ └─ v1 # API version v1 (stores proto files and generated pb.go files, etc.)

├─ cmd # Application entry directory

│ └─ user # Service name (user service)

│ ├─ initial # Initialization logic (e.g., config loading, service setup, etc.)

│ └─ main.go # Main program entry file

├─ configs # Configuration file directory (YAML format config templates)

├─ deployments # Deployment-related files

│ ├─ binary # Binary deployment scripts/configs

│ ├─ docker-compose # Docker Compose orchestration files

│ └─ kubernetes # Kubernetes deployment manifests

├─ docs # Project documentation (API docs, design docs, etc.)

├─ internal # Internal implementation code (not exposed externally)

│ ├─ cache # Cache-related implementations (Redis or local in-memory cache wrappers)

│ ├─ config # Configuration parsing and struct definitions

│ ├─ dao # Data Access Layer (Database Access Object)

│ ├─ ecode # Error code definitions

│ ├─ handler # Business logic handling layer (similar to Controller)

│ ├─ model # Data models/entity definitions

│ ├─ routers # Route definitions and middleware

│ ├─ server # Service startup and lifecycle management

│ └─ service # Business logic layer

├─ scripts # Utility scripts (e.g., code generation, build, run, deploy, etc.)

├─ third_party # Third-party Protobuf dependencies/tools

├─ go.mod # Go module definition file (declares dependencies)

├─ go.sum # Go module checksum file (auto-generated)

├─ Makefile # Project build automation script

└─ README.md # Project description document

Code Call Chain Description:

gRPC main call chain:

cmd/user/main.go → internal/server/grpc.go → internal/service → internal/dao → internal/model

HTTP main call chain:

cmd/user/main.go → internal/server/http.go → internal/routers/router.go → internal/handler → internal/service → internal/dao → internal/model

Tips

From the call chain, it can be seen that the HTTP call chain shares the business logic layer service with the gRPC call chain, eliminating the need to write two sets of code and protocol conversions.

The service layer is mainly responsible for API handling. If more complex business logic needs to be handled, it is recommended to add an additional business logic layer (such as logic or biz) between the service and dao. For details, please click to view the Code Layered Architecture section.

Code Structure Diagram

The created gRPC+HTTP service code adopts the classic "Egg Model" architecture:

Testing gRPC+HTTP Service API

Unzip the code files, open the terminal, navigate to the gRPC+HTTP service code directory, and execute the commands:

# Generate and merge api related code

make proto

# Compile and run the service

make run

make proto command detailed explanation

Usage suggestions

Execute this command only when the API description in the proto file changes; otherwise, skip it and run make run directly.

This command performs the following automated operations in the background:

- Generate *.pb.go files

- Generate router registration code

- Generate error code definitions

- Generate Swagger documentation

- Generate gRPC client test code

- Generate API template code

- Automatically merge API template code

Security mechanism

- Code merging retains existing business logic

- Code is automatically backed up before each merge to:

  - Linux/Mac: /tmp/sponge_merge_backup_code

  - Windows: C:\Users\[Username]\AppData\Local\Temp\sponge_merge_backup_code
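To locate the merge backups from a script, the two paths above can be derived per platform. The sketch below is a hypothetical helper (mergeBackupDir is not part of sponge); on Windows it assumes that os.TempDir resolves to the user's AppData\Local\Temp directory, which is the usual default but can be overridden by environment variables.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
	"runtime"
)

// mergeBackupDir returns the backup directory listed above for the given GOOS value.
func mergeBackupDir(goos string) string {
	const name = "sponge_merge_backup_code"
	if goos == "windows" {
		// Assumption: os.TempDir points at C:\Users\[Username]\AppData\Local\Temp.
		return filepath.Join(os.TempDir(), name)
	}
	return "/tmp/" + name
}

func main() {
	dir := mergeBackupDir(runtime.GOOS)
	if _, err := os.Stat(dir); err != nil {
		fmt.Println("no merge backups yet at", dir)
		return
	}
	fmt.Println("merge backups are under", dir)
}
```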

Test gRPC API

Testing Method 1: Using an IDE

Open the project

- Load the project using an IDE such as Goland or VSCode.

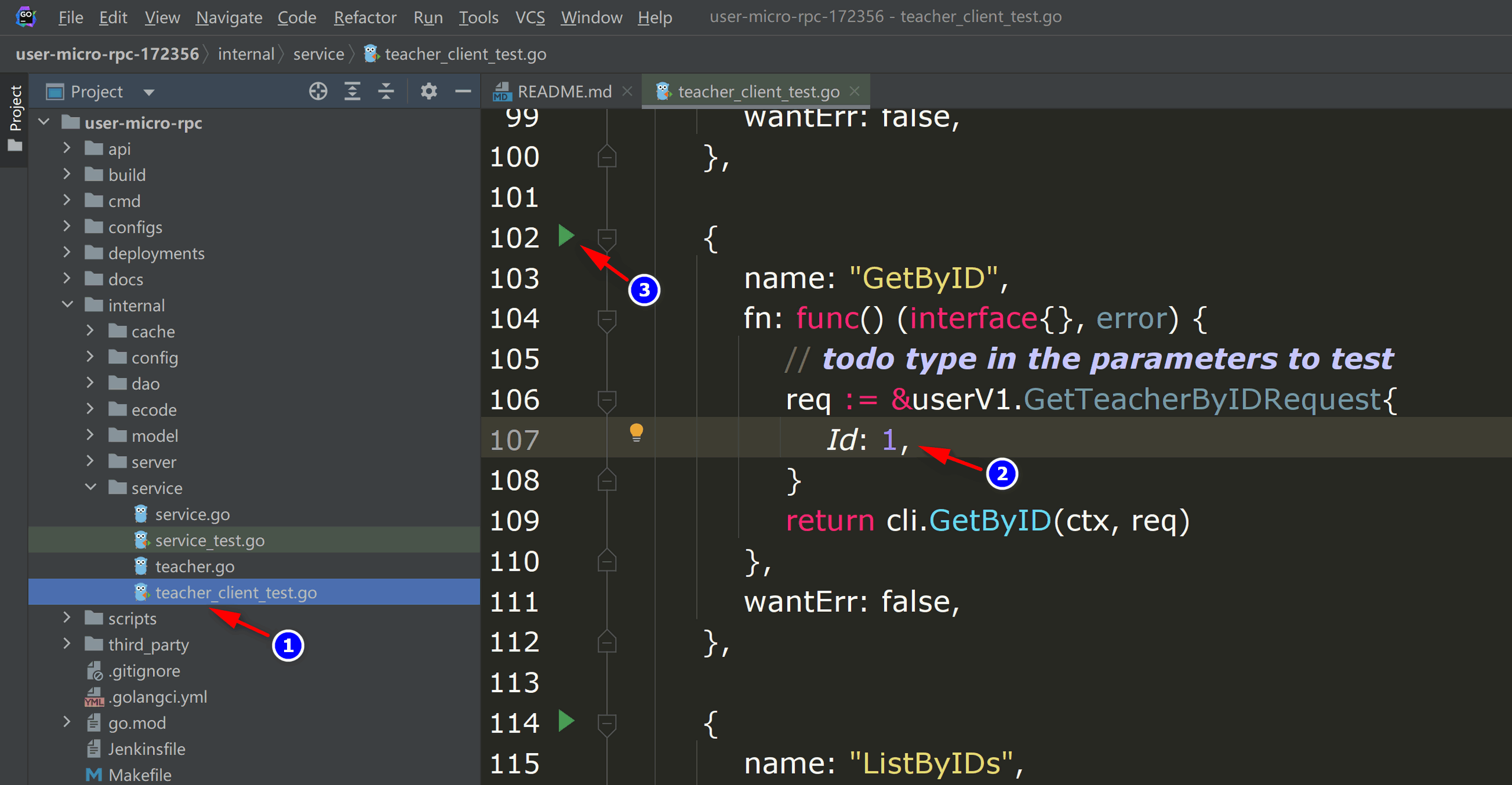

Execute tests

- Navigate to the internal/service directory and open the file with the _client_test.go suffix.

- The file contains test and benchmark functions for each API defined in the proto.

- Modify request parameters (similar to Swagger UI testing).

- Run tests through the IDE, as shown in the figure.

Testing Method 2: Using the Command Line

Enter the directory

cd internal/service

Modify parameters

- Open the xxx_client_test.go file

- Fill in the request parameters for the gRPC API

Execute tests, for example:

go test -run "Test_service_teacher_methods/GetByID"
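The -run value is split on "/" and each element is matched as an unanchored regular expression against the test name at the same depth, so the command above selects the GetByID subtest inside Test_service_teacher_methods. The sketch below approximates that selection rule; it is a simplification (slashes inside brackets are not handled), and the Create subtest and Test_service_course_methods names are hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// selected approximates how `go test -run` picks a test or subtest:
// pattern and name are split on "/", and each pattern element must match
// (unanchored) the name element at the same depth.
func selected(pattern, testName string) bool {
	pats := strings.Split(pattern, "/")
	names := strings.Split(testName, "/")
	for i, p := range pats {
		if i >= len(names) {
			// A parent of a possible match still runs so its subtests can be reached.
			return true
		}
		ok, err := regexp.MatchString(p, names[i])
		if err != nil || !ok {
			return false
		}
	}
	return true
}

func main() {
	pattern := "Test_service_teacher_methods/GetByID"
	for _, name := range []string{
		"Test_service_teacher_methods/GetByID",
		"Test_service_teacher_methods/Create",
		"Test_service_course_methods/GetByID",
	} {
		fmt.Printf("%-42s %v\n", name, selected(pattern, name))
	}
}
```

Since matching is unanchored, a pattern element such as GetByID would also select a subtest whose name merely contains that text; writing ^GetByID$ narrows the match to the exact name.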

Test HTTP API

Open http://localhost:8080/apis/swagger/index.html in your browser to test the HTTP API.

Add CRUD API

When new mysql tables need CRUD APIs, the CRUD API code generated by sponge can be merged seamlessly into the gRPC+HTTP service code without writing any Go code.

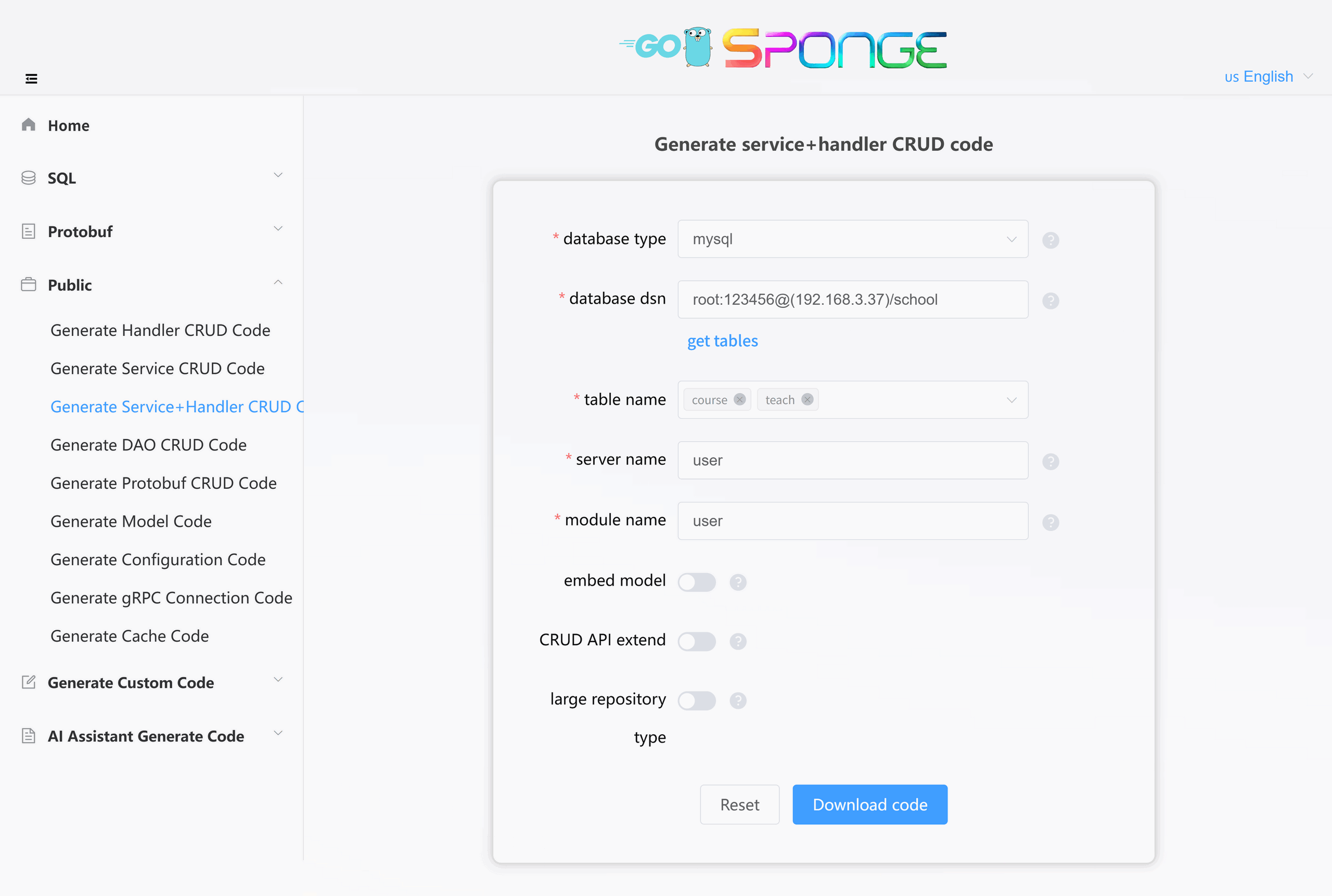

Generate Service+Handler CRUD Code

- Click the left menu bar [Public] → [Generate Service+Handler CRUD Code];

- Select the database mysql, fill in the database dsn, then click the button Get Table Names, and select the mysql tables (multiple selection is allowed);

- Fill in other parameters.

After filling in the parameters, click the button Download Code to generate service+handler CRUD code, as shown in the figure below:

Equivalent Command

# Full command

sponge micro service-handler --module-name=user --server-name=user --db-driver=mysql --db-dsn="root:123456@(192.168.3.37:3306)/school" --db-table=course,teach

# Simplified command (use --out to specify the service code directory, generated code is automatically merged into the specified service directory)

sponge micro service-handler --db-driver=mysql --db-dsn="root:123456@(192.168.3.37:3306)/school" --db-table=course,teach --out=user

Code Directory Structure

The generated service+handler CRUD code directory is as follows:

.

├─ api

│ └─ user

│ └─ v1

└─ internal

├─ cache

├─ dao

├─ handler

├─ ecode

├─ model

└─ service

Test CRUD API

Unzip the code, move the internal and api directories to the gRPC+HTTP service code directory, and execute the commands in the terminal:

# Generate and merge api related code

make proto

# Compile and run the service

make run

To test the CRUD API, please refer to the section above: Testing gRPC+HTTP Service API.

Tips

The List interface in CRUD API supports powerful custom conditional pagination query functionality. Click to view the detailed usage rules: Custom Conditional Pagination Query.

Develop Custom API

In a project, there are usually not only standardized CRUD APIs but also custom APIs. sponge adopts a "define and generate" development model, which allows for rapid development of custom APIs, mainly consisting of the following three steps:

Define API

- Declare the request/response structure of the API in the .proto file

Write business logic

- Fill the core business logic code in the generated code template

- No need to manually write complete gRPC server/client code

Test and verify

- Test code is automatically generated and can be run directly

- No need to rely on third-party gRPC client tools like Postman

- Test APIs in the built-in Swagger, no need to rely on third-party tools like Postman

The following takes adding a "Bind Phone Number" API as an example to explain the development process in detail.

1. Define API

Navigate to the api/user/v1 directory, open the teacher.proto file, and add the description for the bind phone number interface:

import "validate/validate.proto";

import "tagger/tagger.proto";

service user {

// ...

// Bind phone number, describe the specific implementation logic here to tell the sponge built-in AI assistant to generate business logic code

rpc BindPhoneNum(BindPhoneNumRequest) returns (BindPhoneNumReply) {

option (google.api.http) = {

post: "/api/v1/bindPhoneNum"

body: "*"

};

option (grpc.gateway.protoc_gen_openapiv2.options.openapiv2_operation) = {

summary: "Bind Phone Number",

description: "Bind Phone Number",

};

}

}

message BindPhoneNumRequest {

string phoneNum = 1 [(validate.rules).string.len = 11];

string captcha = 2 [(validate.rules).string.len = 4];

}

message BindPhoneNumReply {}

Tips

In the rpc definitions within the proto file, two kinds of rpc are ignored when generating HTTP-related code:

- google.api.http is not set in the rpc method.

- The rpc method is of the stream type.
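The two rules can be read as a simple filter over the rpc methods in a proto file. The sketch below expresses that filter in Go; rpcMethod is a hypothetical, simplified description of an rpc, not a type from sponge or the protobuf libraries, and InternalSync and WatchEvents are made-up method names.

```go
package main

import "fmt"

// rpcMethod is a hypothetical, simplified description of one rpc in a proto file.
type rpcMethod struct {
	Name            string
	HasHTTPRule     bool // true when option (google.api.http) is set
	ClientStreaming bool
	ServerStreaming bool
}

// httpMethods returns the names of the rpcs that would get HTTP code under the two rules.
func httpMethods(methods []rpcMethod) []string {
	var out []string
	for _, m := range methods {
		if !m.HasHTTPRule || m.ClientStreaming || m.ServerStreaming {
			continue // ignored for HTTP; still available over gRPC
		}
		out = append(out, m.Name)
	}
	return out
}

func main() {
	methods := []rpcMethod{
		{Name: "BindPhoneNum", HasHTTPRule: true},
		{Name: "InternalSync"}, // no google.api.http option
		{Name: "WatchEvents", HasHTTPRule: true, ServerStreaming: true}, // stream rpc
	}
	fmt.Println(httpMethods(methods))
}
```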

After adding the API description information, execute the command in the terminal:

# Generate and merge api related code

make proto

2. Implement Business Logic

There are two ways to implement business logic code:

Manually write business logic code

Open the code file internal/service/teacher.go and fill in the specific business logic code in the BindPhoneNum method.

Note

When developing custom APIs, you may need to perform database CRUD or cache operations, which can reuse the following code:

Automatically generate business logic code

Sponge provides a built-in AI assistant to generate business logic code. Click to view the section on AI Assistant generates code.

The business logic code generated by the AI assistant may not fully meet actual requirements and needs to be modified according to the specific situation.

3. Test Custom API

After implementing the business logic code, execute the command in the terminal:

# Compile and run the service

make run

To test the custom API, please refer to the section above: Testing gRPC+HTTP Service API.

Cross-Service gRPC API Calls

In a microservice architecture, the current service may need to call APIs provided by other gRPC services. These target services may be implemented in different languages, but they must all use the Protocol Buffers protocol. The following is a complete description of the call process:

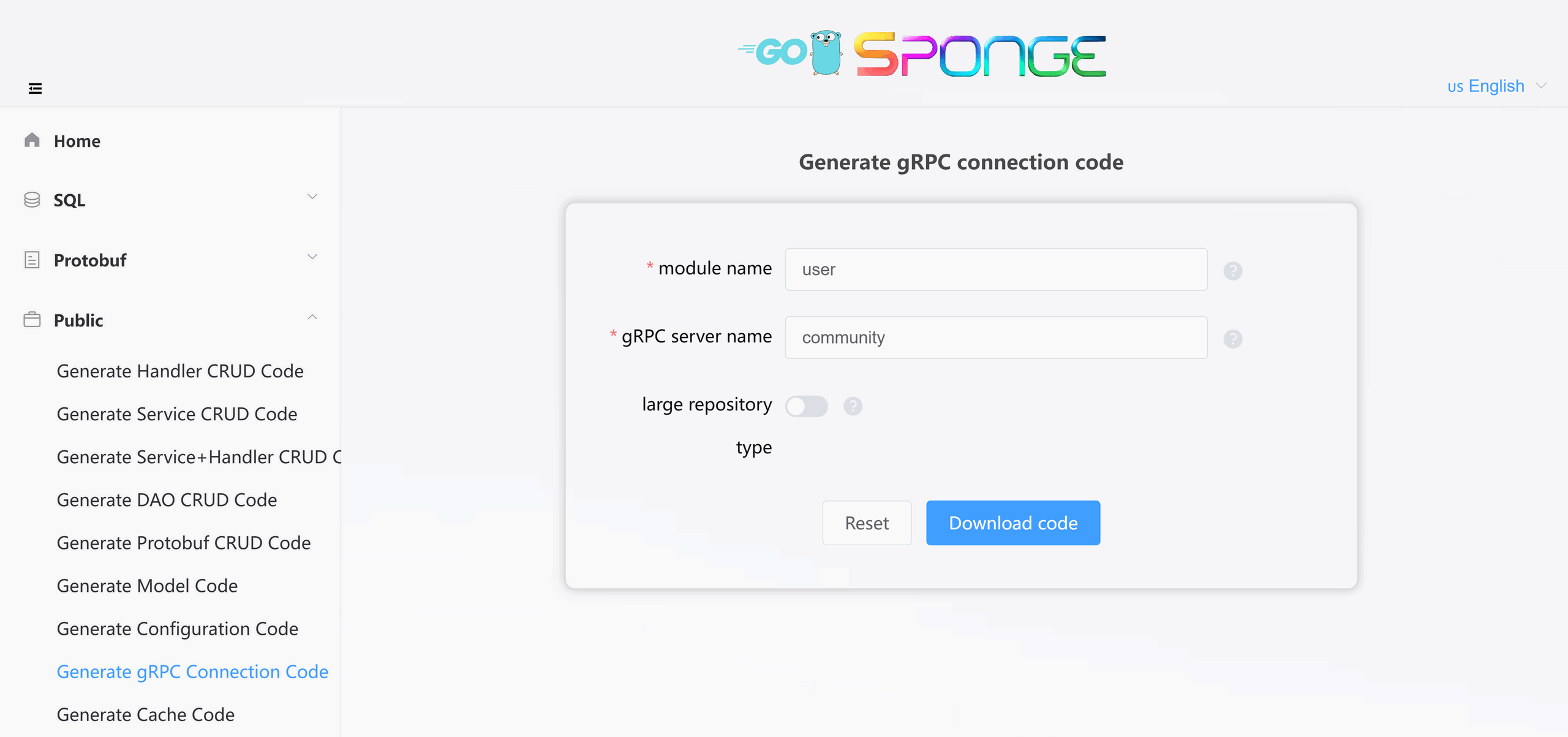

Generate gRPC Service Connection Code

Operation Steps:

- Access the sponge UI interface

- Navigate to [Public] → [Generate gRPC Connection Code]

- Fill in the parameters:

- Module name (required)

- gRPC service name (supports multiple services, separated by commas)

Click the button [Download Code] to generate the gRPC service connection code, as shown in the figure below:

Equivalent Command

# Full command

sponge micro rpc-conn --module-name=user --rpc-server-name=community

# Simplified command (use --out to specify the service code directory, generated code is automatically merged into the specified service directory)

sponge micro rpc-conn --rpc-server-name=community --out=edusys

Generated Code Structure:

.

└─ internal

└─ rpcclient # Contains full client configuration such as service discovery, load balancing, etc.

Unzip the code and move the internal directory to the current service code directory.

Example of using generated gRPC connection code in your code:

In actual use, you may need to call APIs from multiple gRPC services to get data. The initialization example code is as follows:

package service

import (
	userV1 "edusys/api/user/v1"
	relationV1 "edusys/api/relation/v1"
	creationV1 "edusys/api/creation/v1"
	"edusys/internal/rpcclient" // generated connection code; import path assumes the module name edusys
	// ......
)

type user struct {
	userV1.UnimplementedTeacherServer

	relationCli relationV1.RelationClient
	creationCli creationV1.CreationClient
}

// NewUserServer creates the user service and wires in the gRPC clients it depends on
func NewUserServer() userV1.UserLogicer {
	return &user{
		// Instantiate multiple gRPC service client interfaces,
		// each from the package generated for its own proto
		relationCli: relationV1.NewRelationClient(rpcclient.GetRelationRPCConn()),
		creationCli: creationV1.NewCreationClient(rpcclient.GetCreationRPCConn()),
	}
}

// ......

Configure Target gRPC Service Connection Parameters

Add the following configuration to the configuration file configs/service-name.yml:

grpcClient:
  - name: "user"               # grpc service name
    host: "127.0.0.1"          # grpc service address, if service discovery is enabled, this field value is invalid
    port: 8282                 # grpc service port, if service discovery is enabled, this field value is invalid
    registryDiscoveryType: ""  # Service discovery, disabled by default, supports consul, etcd, nacos

Multiple service configuration example:

grpcClient:
  - name: "user"
    host: "127.0.0.1"
    port: 18282
    registryDiscoveryType: ""
  - name: "relation"
    host: "127.0.0.1"
    port: 28282
    registryDiscoveryType: ""
  - name: "creation"
    host: "127.0.0.1"
    port: 38282
    registryDiscoveryType: ""

Tips

For complete configuration options, please refer to the description of the grpcClient field in the configuration file configs/[service name].yml.
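The comments on host and port imply a simple rule: the address is used only while registryDiscoveryType is empty. The sketch below makes that rule explicit. GrpcClient here is a hypothetical stand-in for the generated config struct, and directAddr is an illustrative helper, not a sponge function.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// GrpcClient mirrors the four grpcClient fields shown in the configuration above.
type GrpcClient struct {
	Name                  string
	Host                  string
	Port                  int
	RegistryDiscoveryType string
}

// directAddr returns host:port when service discovery is disabled.
// With discovery enabled, host and port are ignored and ok is false.
func directAddr(c GrpcClient) (addr string, ok bool) {
	if c.RegistryDiscoveryType != "" {
		return "", false
	}
	return net.JoinHostPort(c.Host, strconv.Itoa(c.Port)), true
}

func main() {
	clients := []GrpcClient{
		{Name: "user", Host: "127.0.0.1", Port: 18282},
		{Name: "relation", Host: "127.0.0.1", Port: 28282, RegistryDiscoveryType: "etcd"},
	}
	for _, c := range clients {
		if addr, ok := directAddr(c); ok {
			fmt.Printf("%s: dial %s directly\n", c.Name, addr)
		} else {
			fmt.Printf("%s: resolved by name through %s\n", c.Name, c.RegistryDiscoveryType)
		}
	}
}
```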

Call Target gRPC Service API

After successfully connecting to the target gRPC service, in order to clarify the callable API interfaces, you need to introduce the Go language stub code generated from the proto files. Depending on the service architecture, there are mainly two ways to call:

Mono-repo Architecture

If the target gRPC service was created by sponge and belongs to the same microservice monorepo as the current service (the "Monorepo Type" option was selected when creating the service), you can call its API directly, and there is no cross-service dependency issue.

Multi-repo Architecture

If the target gRPC service is located in an independent code repository, you need to solve the problem of cross-service references to proto files and Go stub code. The following are two common solutions:

Solution 1: Use a public Protobuf repository

For microservice systems with a multi-repo architecture, it is recommended to create a dedicated public Git repository (such as public_protobuf) to centrally manage proto files and their generated Go stub code. A typical directory structure is as follows:

.
├── api
│   ├── serverName1
│   │   └── v1
│   │       ├── serverName1.pb.go
│   │       └── serverName1_grpc.pb.go
│   └── serverName2
│       └── v1
│           ├── serverName2.pb.go
│           └── serverName2_grpc.pb.go
├── protobuf
│   ├── serverName1
│   │   └── serverName1.proto
│   └── serverName2
│       └── serverName2.proto
├── go.mod
└── go.sum

Calling steps:

- Copy the protobuf directory from the public repository to the third_party directory of your local service.

├── third_party
│   └── protobuf
│       ├── serviceA
│       │   └── serviceA.proto
│       └── serviceB
│           └── serviceB.proto

- Import the target proto in your local proto file using import (e.g., import "protobuf/serviceA/serviceA.proto";)

- Call the API of the target gRPC service in your local service.

Note

Ensure that the proto files under third_party/protobuf are synchronized with the public repository.

Solution 2: Copy the target service's proto files to your service and generate Go language stub code

Based on how the target service was created, different processing flows are adopted:

Services not created by sponge

- Manually copy the target service's proto files to the api/target-service-name/v1 directory

- Manually modify the go_package path definition in the proto file

Services created by sponge

Integrated through automated commands:

# Copy the target service's proto files (supports multiple service directories, separated by commas)

make copy-proto SERVER=../target_service_dir

# Generate Go stub code

make proto

Advanced options:

- Specify proto files: PROTO_FILE=file1,file2

- Automatic backup: Overwritten files can be found in /tmp/sponge_copy_backup_proto_files

Test Cross-Service gRPC API Calls

Start dependent services:

- gRPC services created by sponge: Execute make run

- Other gRPC services: Run according to their actual startup commands

Start your service by executing the commands:

# Generate and merge api related code

make proto

# Compile and run the service

make run

To test cross-service gRPC API calls, please refer to the section above: Testing gRPC+HTTP Service API.

Service Configuration Description

gRPC services created by sponge provide rich configurable components. You can flexibly manage these components by modifying the configs/service-name.yml configuration file.

Component Management Description

You can add or replace custom gRPC interceptors in internal/server/grpc.go.

Components Enabled by Default

| Component | Feature Description | Configuration Documentation |

|---|---|---|

| logger | Logging component • Default terminal output • Supports console/json format • Supports log file splitting and retention | Logger Configuration |

| enableMetrics | Prometheus metrics collection • Default route /metrics | Monitoring Configuration |

| enableStat | Resource monitoring • Records CPU/memory usage per minute • Automatically saves profile if threshold is exceeded | Resource Statistics |

| database | Database support • MySQL/MongoDB/PostgreSQL/SQLite | gorm Configuration mongodb Configuration |

Components Disabled by Default

| Component | Feature Description | Configuration Documentation |

|---|---|---|

| cacheType | Cache support (Redis/Memory) | redis Configuration |

| enableHTTPProfile | Performance analysis (pprof) • Default route /debug/pprof/ | - |

| enableLimit | Adaptive request rate limiting | Rate Limit Configuration |

| enableCircuitBreaker | Service circuit breaker protection | Circuit Breaker Configuration |

| enableTrace | Distributed tracing | Tracing Configuration |

| registryDiscoveryType | Service registration and discovery • Consul/Etcd/Nacos | Service Registration and Discovery Configuration |

| grpcClient | gRPC client connection settings • Service name, address, port • Service discovery type • Timeout settings, load balancing • Certificate verification, token verification | gRPC Client Configuration |

Configuration Update Process

If you add or change field names in the configuration file configs/service-name.yml, you need to update the corresponding Go code (internal/config/xxx.go) by executing the command in the terminal:

# Regenerate configuration code

make update-config

Note

If you only modify the field values in the configuration file, you do not need to execute the make update-config command, just recompile and run.
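The difference between changing a value and changing a field name comes from how the file maps onto Go structs: a new value fits the existing field, while a new name has no field to land in until the struct is regenerated. The sketch below illustrates this with encoding/json as a stand-in for the YAML decoder, since the standard library has no YAML package. The appConfig struct and the enableAudit key are hypothetical, and strict decoding is used only to make the mismatch visible; how the real loader treats unknown keys is not stated in this document.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// appConfig is a hypothetical stand-in for a struct under internal/config.
type appConfig struct {
	Name        string `json:"name"`
	EnableTrace bool   `json:"enableTrace"`
}

// load decodes raw into appConfig and rejects keys the struct does not know.
func load(raw string) (appConfig, error) {
	var c appConfig
	dec := json.NewDecoder(strings.NewReader(raw))
	dec.DisallowUnknownFields()
	err := dec.Decode(&c)
	return c, err
}

func main() {
	// Changing only a value: the existing struct still fits.
	c, err := load(`{"name": "user", "enableTrace": true}`)
	fmt.Println(c.EnableTrace, err)

	// Adding a field name: the struct has nowhere to put it until it is regenerated.
	_, err = load(`{"name": "user", "enableTrace": true, "enableAudit": true}`)
	fmt.Println(err != nil)
}
```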

Tips

To learn more about components and configuration details, click to view the Components and Configuration section.