Creating gRPC Server Based on Protobuf

Overview

Creating a gRPC server based on Protobuf provides a complete solution for general gRPC service development. Developers only need to focus on implementing business logic, while the rest of the code (such as framework code and interface definitions) is generated automatically by sponge. Business logic code can also be generated with sponge's built-in AI assistant, further reducing manual coding.

Tips

The other gRPC service development solution, Create gRPC server based on sql (see Based on SQL), supports only the built-in ORM components, whereas this solution lets you choose between the built-in ORM components and a custom ORM component. This is the main difference between the two solutions; everything else is the same.

Applicable Scenarios: Suitable for general gRPC service projects.

By default, Create gRPC server based on Protobuf includes no ORM component, and you can develop with either a built-in ORM component or a custom one. Below, the built-in ORM component gorm is used as an example to walk through the steps of developing a gRPC service.

Tips

Built-in ORM components: gorm and mongo, supporting the database types mysql, mongodb, postgresql, and sqlite.

Custom ORM components: for example sqlx, xorm, or ent. If you choose a custom ORM component, you need to implement the related code yourself. Click to see the chapter: Instructions on using custom ORM components.

Preparations

Environment Requirements:

- sponge installed

- A mysql database service

- Database tables created

- A proto file, for example user.proto

Tips

Generating service CRUD code depends on the mysql service and database tables. You can start the mysql service via the docker script and then import the example SQL.

To quickly get familiar with the basic syntax rules of Protobuf, please refer to the Protobuf documentation.

Creating gRPC Server

Operation Steps

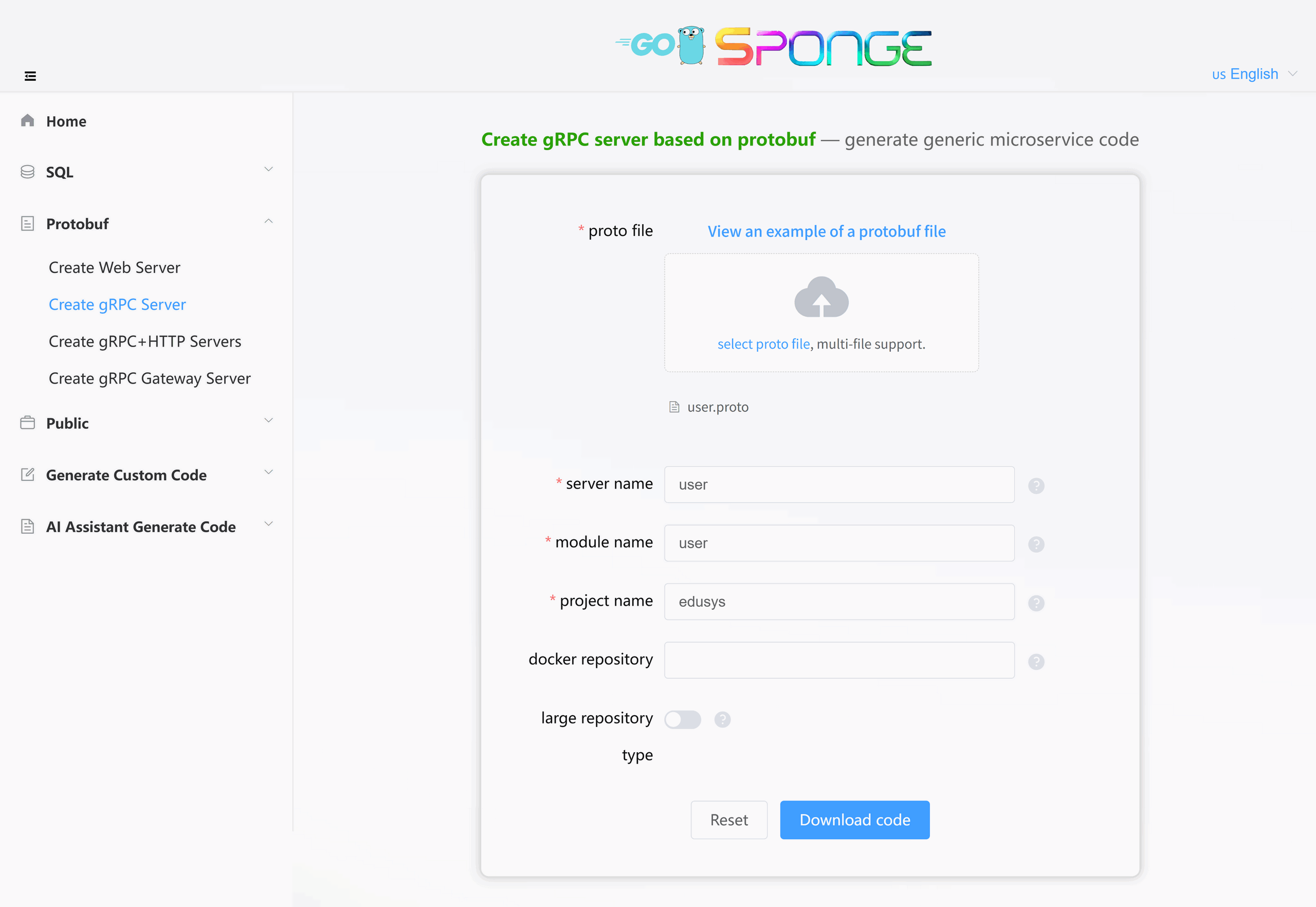

Run the command sponge run in a terminal to open the code generation UI:

- Click [Protobuf] → [Create gRPC Server] in the left menu bar;

- Select the proto file(s) (multiple selection is allowed);

- Fill in the other parameters. Hover the mouse over the question mark ? to view parameter descriptions.

Tips

If you enable the Large repository type option when filling in parameters, you must keep this setting for all subsequent related code generation.

After filling in the parameters, click the Download Code button to generate the gRPC service code, as shown in the figure below:

Equivalent Command

sponge micro rpc-pb --module-name=user --server-name=user --project-name=edusys --protobuf-file=./user.proto

Tips

By default, the generated gRPC service code directory is named in the format service name-type-time; you can rename it as needed.

The system automatically saves records of successfully generated code to make later code generation more convenient. When the page is refreshed or reopened, some parameters from the previous generation are filled in.

Directory Structure

The generated code directory structure is as follows:

.
├─ api                 # API protocol definition directory (proto/OpenAPI, etc.)
│  └─ user             # Service name (user service)
│     └─ v1            # API version v1 (stores proto files and generated pb.go, etc.)
├─ cmd                 # Application entry directory
│  └─ user             # Service name (user service)
│     ├─ initial       # Initialization logic (e.g., config loading, service setup, etc.)
│     └─ main.go       # Main program entry file
├─ configs             # Configuration file directory (YAML format config templates)
├─ deployments         # Deployment-related files
│  ├─ binary           # Binary deployment scripts/configs
│  ├─ docker-compose   # Docker Compose orchestration files
│  └─ kubernetes       # Kubernetes deployment manifests
├─ docs                # Project documentation (API docs, design docs, etc.)
├─ internal            # Internal implementation code (not exposed externally)
│  ├─ config           # Config parsing and struct definitions
│  ├─ ecode            # Error code definitions
│  ├─ server           # Service startup and lifecycle management
│  └─ service          # Business logic layer
├─ scripts             # Utility scripts (e.g., code generation, build, run, deploy, etc.)
├─ third_party         # Third-party Protobuf dependencies/tools
├─ go.mod              # Go module definition file (declares dependencies)
├─ go.sum              # Go module checksum file (auto-generated)
├─ Makefile            # Project build automation script
└─ README.md           # Project description document

The gRPC service code generated by sponge adopts a layered architecture (using built-in ORM components). The complete call chain is as follows:

cmd/user/main.go → internal/server/grpc.go → internal/service → internal/dao → internal/model

Among these layers, the service layer is mainly responsible for API handling. For more complex business logic, it is recommended to add a separate business logic layer (such as logic or biz) between the service and dao layers. For details, please click to see the Code Layered Architecture chapter.

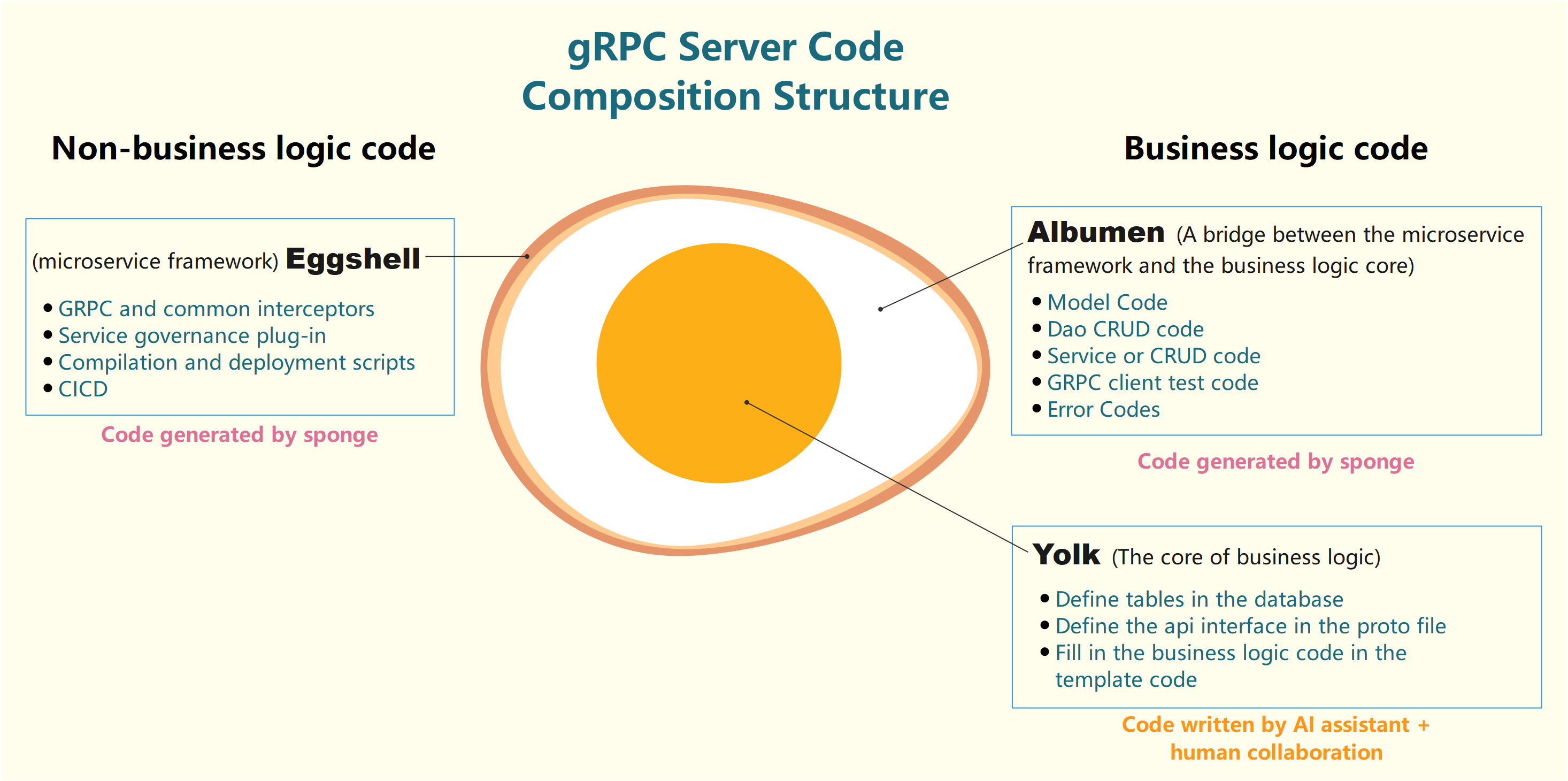

Code Structure Diagram

The created gRPC service code adopts the "egg model" architecture:

Testing gRPC Service APIs

Unzip the code file, open a terminal, switch to the gRPC service code directory, and execute the commands:

# Generate and merge API-related code
make proto

# Open the generated code file (e.g., internal/service/user.go)
# Fill in the business logic code, either by writing it manually or with the built-in AI assistant

# Compile and run the service
make run

make proto command explanation

Usage Recommendation

Run this command only when the API description in the proto file changes; otherwise skip it and run make run directly.

This command performs the following automated operations in the background:

- Generate *.pb.go files

- Generate error code definitions

- Generate gRPC client test code

- Generate API template code

- Automatically merge API template code
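Conceptually, the merge step keeps every method you have already implemented and adds template stubs only for RPCs newly defined in the proto file. The mergeMethods function below is a simplified, hypothetical model of that idea (method bodies are plain strings keyed by RPC name); it is not sponge's actual merge implementation.

```go
package main

import (
	"fmt"
	"sort"
)

// mergeMethods models the merge step: bodies in existing are kept unchanged, and a
// TODO stub is added only for RPC names from the proto that have no body yet.
// In this sketch, methods no longer in the proto are also kept, so no code is dropped.
func mergeMethods(existing map[string]string, protoRPCs []string) map[string]string {
	merged := make(map[string]string, len(existing)+len(protoRPCs))
	for name, body := range existing {
		merged[name] = body // hand-written logic is copied as-is
	}
	for _, rpc := range protoRPCs {
		if _, ok := merged[rpc]; !ok {
			merged[rpc] = "// TODO: implement " + rpc // new template stub
		}
	}
	return merged
}

func main() {
	existing := map[string]string{
		"GetByID": "return s.dao.GetByID(ctx, req.Id) // hand-written",
	}
	merged := mergeMethods(existing, []string{"GetByID", "Create"})

	// Print in a stable order, since map iteration order is random.
	names := make([]string, 0, len(merged))
	for name := range merged {
		names = append(names, name)
	}
	sort.Strings(names)
	for _, name := range names {
		fmt.Printf("%s: %s\n", name, merged[name])
	}
}
```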

Safety Mechanism

- The original business logic is preserved during code merging.

- Code is automatically backed up before each merge to:
  - Linux/Mac: /tmp/sponge_merge_backup_code
  - Windows: C:\Users\[Username]\AppData\Local\Temp\sponge_merge_backup_code

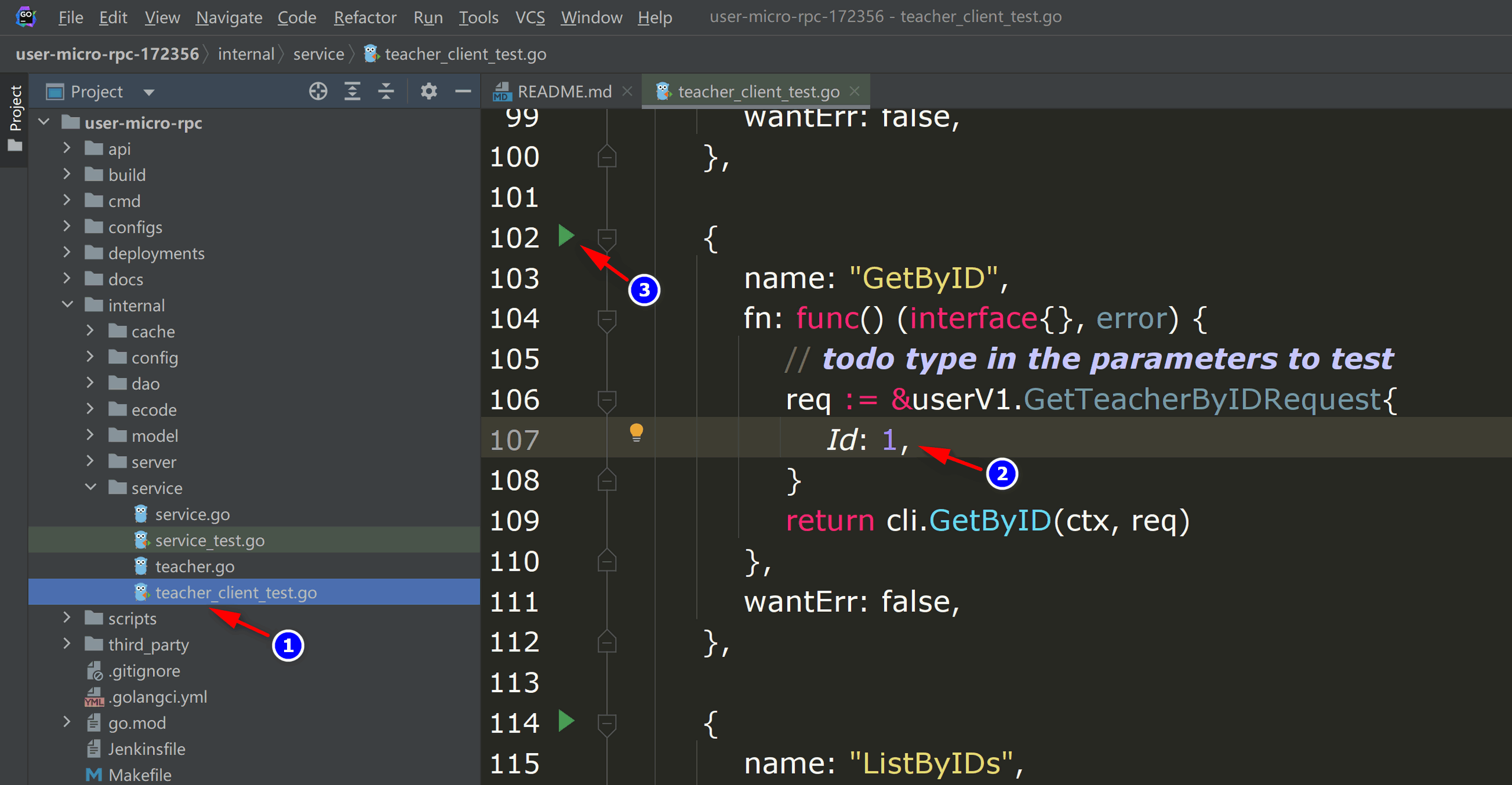

Testing Method 1: IDE (Recommended)

Open Project

- Load the project using an IDE such as

GolandorVSCode.

- Load the project using an IDE such as

Execute Test

- Navigate to the

internal/servicedirectory and open the file with the suffix_client_test.go. - The file contains test and benchmark functions for each API defined in the proto.

- Modify the request parameters (similar to testing in a Swagger interface).

- Run the test through the IDE, as shown in the figure below:

micro-rpc-test - Navigate to the

Testing Method 2: Command Line

Enter Directory

cd internal/service

Modify Parameters

- Open the xxx_client_test.go file.

- Fill in the request parameters for the gRPC API.

Execute Test

go test -run "TestFunctionName/gRPCMethodName"

Example:

go test -run "Test_service_teacher_methods/GetByID"
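The -run flag of go test splits its pattern at slashes and matches each part, as an unanchored regular expression, against the corresponding level of the test name (top-level test first, then subtest). The simplified matchesRun helper below is hypothetical and only illustrates that rule; unlike go test, it ignores the special handling of slashes inside bracket expressions. It shows why the example above selects the GetByID subtest of Test_service_teacher_methods while skipping its other subtests.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// matchesRun reports whether a test identifier such as "TestX/GetByID" is selected
// by a -run pattern: the i-th slash-separated part of the pattern must match the
// i-th level of the name. Extra pattern parts are ignored for a parent test, which
// still runs so that its matching subtests can be reached.
func matchesRun(pattern, testID string) (bool, error) {
	pats := strings.Split(pattern, "/")
	ids := strings.Split(testID, "/")
	for i, p := range pats {
		if i >= len(ids) {
			break
		}
		ok, err := regexp.MatchString(p, ids[i]) // unanchored, like go test
		if err != nil {
			return false, err
		}
		if !ok {
			return false, nil
		}
	}
	return true, nil
}

func main() {
	pattern := "Test_service_teacher_methods/GetByID"
	for _, id := range []string{
		"Test_service_teacher_methods",
		"Test_service_teacher_methods/GetByID",
		"Test_service_teacher_methods/Create",
	} {
		ok, err := matchesRun(pattern, id)
		fmt.Println(id, ok, err)
	}
}
```

Because matching is unanchored, GetByID would also select a subtest named, say, GetByIDs. To run exactly one method, anchor the subtest part: go test -run 'Test_service_teacher_methods/^GetByID$'.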

Adding CRUD API

Click to see the chapter: Adding CRUD API.

Developing Custom API

Click to see the chapter: Developing Custom API.

Cross-Service gRPC API Call

Click to see the chapter: Cross-Service gRPC API Call.

Service Configuration Explanation

Click to see the chapter: Service Configuration Explanation.